
[ML] Neural Network Example

유일리

2022. 12. 1. 14:42

```python
import numpy
# scipy.special for the sigmoid function expit()
import scipy.special


# neural network class definition
class neuralNetwork:

    # initialise the neural network
    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        # set number of nodes in each input, hidden, output layer
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes

        # link weight matrices, wih & who
        # entry [j, i] is the weight of the link from node i to node j in the next layer
        # self.wih = (numpy.random.rand(self.hnodes, self.inodes) - 0.5)
        # self.who = (numpy.random.rand(self.onodes, self.hnodes) - 0.5)
        # normal distribution, mean 0, standard deviation 1/sqrt(number of incoming links)
        self.wih = numpy.random.normal(0.0, pow(self.inodes, -0.5), (self.hnodes, self.inodes))
        self.who = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.onodes, self.hnodes))

        # learning rate (taken from the constructor argument)
        self.lr = learningrate

        # activation function is the sigmoid function
        self.activation_function = lambda x: scipy.special.expit(x)

    # train the neural network
    def train(self, inputs_list, targets_list):
        # convert inputs list into 2d arrays (column vectors)
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T

        # calculate signals into hidden layer
        hidden_inputs = numpy.dot(self.wih, inputs)
        # calculate the signals emerging from hidden layer
        hidden_outputs = self.activation_function(hidden_inputs)

        # calculate signals into final output layer
        final_inputs = numpy.dot(self.who, hidden_outputs)
        # calculate the signals emerging from final output layer
        final_outputs = self.activation_function(final_inputs)

        # error is the (target - actual)
        output_errors = targets - final_outputs
        # hidden layer error is the output_errors, split by weights, recombined at hidden nodes
        hidden_errors = numpy.dot(self.who.T, output_errors)

        # update the weights for the links between the hidden & output layers
        self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)), numpy.transpose(hidden_outputs))
        # update the weights for the links between the input & hidden layers
        self.wih += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)), numpy.transpose(inputs))

        return final_outputs
        # return output_errors

    # query the neural network
    def query(self, inputs_list):
        # convert inputs list into 2d arrays (column vector)
        inputs = numpy.array(inputs_list, ndmin=2).T

        # calculate signals into hidden layer
        hidden_inputs = numpy.dot(self.wih, inputs)
        # calculate the signals emerging from hidden layer
        hidden_outputs = self.activation_function(hidden_inputs)

        # calculate signals into final output layer
        final_inputs = numpy.dot(self.who, hidden_outputs)
        # calculate the signals emerging from final output layer
        final_outputs = self.activation_function(final_inputs)

        return final_outputs
```
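Written out as equations, `train()` performs the following forward pass and weight updates. This is a transcription of what the code does, using $x$ = `inputs`, $t$ = `targets`, $W_{ih}$ = `wih`, $W_{ho}$ = `who`, $\alpha$ = `lr`, $\sigma$ = the sigmoid `expit`, and $\circ$ for the element-wise product:

$$
\begin{aligned}
h &= \sigma(W_{ih}\,x), \qquad o = \sigma(W_{ho}\,h) \\
e_o &= t - o, \qquad e_h = W_{ho}^{\top} e_o \\
W_{ho} &\leftarrow W_{ho} + \alpha\,\big(e_o \circ o \circ (1 - o)\big)\, h^{\top} \\
W_{ih} &\leftarrow W_{ih} + \alpha\,\big(e_h \circ h \circ (1 - h)\big)\, x^{\top}
\end{aligned}
$$

The factors $o(1-o)$ and $h(1-h)$ come from $\sigma'(z) = \sigma(z)\,(1-\sigma(z))$. Assuming the loss $E = \tfrac12 \lVert t - o \rVert^2$ (the post does not name one), the output-layer rule is exactly $-\alpha\,\partial E / \partial W_{ho}$. The hidden-layer rule back-propagates the raw errors $e_o$ rather than $e_o \circ o \circ (1-o)$, so it is a simplification of the exact gradient of that loss. Note that $e_h$ is computed with $W_{ho}$ before it is updated.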

#Main code

#number of input, hidden and output nodes

input_nodes = 2

hidden_nodes = 2

output_nodes = 2

#learning rate is 0.3

learning_rate = 0.3

#create instance of neural network

n = neuralNetwork(input_nodes,hidden_nodes,output_nodes, learning_rate)

final_outputs = n.query([0.05,0.10])

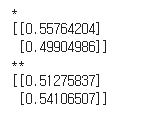

print('*')

print(final_outputs)
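The untrained query returns a 2×1 column of sigmoid outputs. A minimal sketch of the same forward pass makes the shapes explicit and checks one hidden node by hand. The weights here are hypothetical fixed values chosen only for illustration (the post draws them at random), laid out like `wih` / `who` with rows as destination nodes and columns as source nodes:

```python
import numpy
import scipy.special

# Hypothetical fixed weights (not from the post), so the result is reproducible
wih = numpy.array([[0.15, 0.20],
                   [0.25, 0.30]])
who = numpy.array([[0.40, 0.45],
                   [0.50, 0.55]])

# numpy.array(..., ndmin=2) has shape (1, 2); .T turns it into a (2, 1) column vector
inputs = numpy.array([0.05, 0.10], ndmin=2).T

# Same two steps as query(): weighted sum, then sigmoid, once per layer
hidden_outputs = scipy.special.expit(numpy.dot(wih, inputs))
final_outputs = scipy.special.expit(numpy.dot(who, hidden_outputs))

# Hidden node 0 computed by hand: sigmoid(0.15*0.05 + 0.20*0.10)
h0_manual = 1.0 / (1.0 + numpy.exp(-(0.15 * 0.05 + 0.20 * 0.10)))

print(inputs.shape)         # (2, 1)
print(final_outputs.shape)  # (2, 1)
print(final_outputs)
```

Because every output passes through the sigmoid, each entry lies strictly between 0 and 1 regardless of the weights.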

for e in range(10):

n.train([0.05,0.10],[0.01,0.99])

print('**')

outputs = n.query([0.05,0.10])

print(outputs)

for e in range(100):

n.train([0.05,0.10],[0.01,0.99])

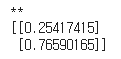

print('**')

outputs = n.query([0.05,0.10])

print(outputs)
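Each `n.train(...)` call in the loops above is one gradient-descent step on the single training example. Assuming the loss is $E = \tfrac12 \sum (t - o)^2$ (an assumption; the post does not name a loss), a central finite-difference check can confirm that the `who` update in `train()` equals $-\alpha\,\partial E / \partial W_{ho}$. This sketch uses its own seeded weights (`default_rng` is swapped in for `numpy.random.normal` only for repeatability) and checks only the output-layer update:

```python
import numpy
import scipy.special

rng = numpy.random.default_rng(0)  # seeded only so the check is repeatable

# Same shapes and initialisation scale as neuralNetwork(2, 2, 2, 0.3)
wih = rng.normal(0.0, 2 ** -0.5, (2, 2))
who = rng.normal(0.0, 2 ** -0.5, (2, 2))
lr = 0.3
x = numpy.array([0.05, 0.10], ndmin=2).T
t = numpy.array([0.01, 0.99], ndmin=2).T


def loss(who_):
    """E = 0.5 * sum((t - o)^2) as a function of the hidden->output weights."""
    h = scipy.special.expit(wih @ x)
    o = scipy.special.expit(who_ @ h)
    return 0.5 * numpy.sum((t - o) ** 2)


# The who step exactly as train() computes it
h = scipy.special.expit(wih @ x)
o = scipy.special.expit(who @ h)
e = t - o
step = lr * numpy.dot(e * o * (1.0 - o), h.T)

# Central finite differences for dE/dwho, one weight at a time
eps = 1e-6
grad = numpy.zeros_like(who)
for j in range(who.shape[0]):
    for i in range(who.shape[1]):
        bump = numpy.zeros_like(who)
        bump[j, i] = eps
        grad[j, i] = (loss(who + bump) - loss(who - bump)) / (2 * eps)

print(numpy.allclose(step, -lr * grad))  # True
```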

```python
# train 10,000 more times on the same example
for e in range(10000):
    n.train([0.05, 0.10], [0.01, 0.99])
print('**')
outputs = n.query([0.05, 0.10])
print(outputs)
```
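The three training loops run 10, 100 and 10,000 updates on the same example. A compact, self-contained sketch of the same update rule (plain functions instead of the class; `train_step` and the seed are hypothetical, introduced only for this illustration) records the squared error $E = \tfrac12 \sum (t - o)^2$ after each stage, so the convergence toward the target [0.01, 0.99] can be read as numbers:

```python
import numpy
import scipy.special


def train_step(wih, who, x, t, lr):
    """One update mirroring neuralNetwork.train(); modifies wih and who in place."""
    h = scipy.special.expit(wih @ x)
    o = scipy.special.expit(who @ h)
    e_o = t - o
    e_h = who.T @ e_o  # computed before who changes, as in train()
    who += lr * (e_o * o * (1.0 - o)) @ h.T
    wih += lr * (e_h * h * (1.0 - h)) @ x.T


def query(wih, who, x):
    """Forward pass mirroring neuralNetwork.query()."""
    return scipy.special.expit(who @ scipy.special.expit(wih @ x))


rng = numpy.random.default_rng(1)  # hypothetical seed, for repeatability only
wih = rng.normal(0.0, 2 ** -0.5, (2, 2))
who = rng.normal(0.0, 2 ** -0.5, (2, 2))
x = numpy.array([0.05, 0.10], ndmin=2).T
t = numpy.array([0.01, 0.99], ndmin=2).T

errors = [float(0.5 * numpy.sum((t - query(wih, who, x)) ** 2))]
for n_steps in (10, 100, 10000):  # same schedule as the post
    for _ in range(n_steps):
        train_step(wih, who, x, t, 0.3)
    errors.append(float(0.5 * numpy.sum((t - query(wih, who, x)) ** 2)))

print(errors)                      # error after 0, 10, 110 and 10110 updates
print(query(wih, who, x).ravel())  # final outputs, compare with [0.01, 0.99]
```

The exact numbers depend on the random initial weights, but the error should shrink from stage to stage as the outputs approach the target.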

https://github.com/erica00j/machinelearning/blob/main/NN1.ipynb
